On April 14, Xpeng Motors held an AI Technology Sharing Session in Hong Kong and officially revealed its latest research progress: a 72-billion-parameter foundation model for autonomous driving, named the “Xpeng Foundation Model.” The model is meant to serve as the intelligent “brain” of Xpeng vehicles. It is trained in the cloud and then distilled into smaller models that run on edge devices such as cars. Xpeng also plans to use it to power its AI robots and flying vehicles.
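Xpeng has not published how its cloud-to-vehicle distillation works. Purely as a generic illustration, the classic knowledge-distillation recipe trains a small "student" model to match the temperature-softened output distribution of a large "teacher." The sketch below computes that soft-target loss in plain Python. The function names, the temperature of 2.0, and the three example "driving actions" are assumptions for illustration, not Xpeng's method.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures,
    as in the standard knowledge-distillation formulation.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl

# Hypothetical logits over three discrete actions (e.g. keep lane, slow down, change lane).
teacher = [2.0, 0.5, -1.0]
student_close = [1.8, 0.6, -0.9]   # student that already mimics the teacher well
student_far = [-1.0, 0.5, 2.0]     # student that prefers the opposite action

print("close student loss:", distillation_loss(teacher, student_close))
print("far student loss:  ", distillation_loss(teacher, student_far))
```

A student whose softened predictions track the teacher's gets a lower loss, which is the signal that lets a much smaller model inherit behavior from a large cloud model.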

Three Core Capabilities: Vision, Reasoning, and Motion

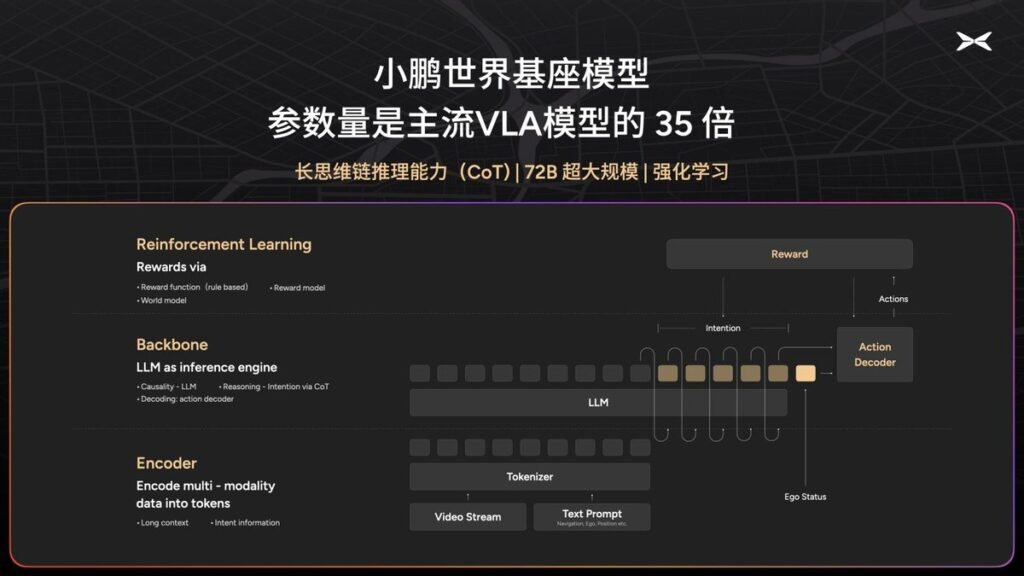

According to Li Liyun, Head of Autonomous Driving at Xpeng Motors, the foundation model is built on a large language model (LLM) architecture and trained on vast amounts of high-quality driving data. It has three core capabilities: visual understanding, chain-of-thought (CoT) reasoning, and motion generation. Through reinforcement learning, the model keeps improving, and Xpeng expects it eventually to develop driving skills that rival or surpass those of human drivers.

Xpeng Foundation Model: Scale and Reasoning Power

With 72 billion parameters, the Xpeng Foundation Model is about 35 times larger than mainstream Vision-Language-Action (VLA) models. Its standout advantage is CoT reasoning: the model can perform complex, human-like common-sense reasoning and translate its conclusions into control commands such as steering and braking, allowing it to act effectively in the physical world.

Building China’s First Cloud-Based Model Factory

To support development, Xpeng built a “cloud-based model factory” from the ground up and launched the first 10,000-GPU intelligent computing cluster in China’s automotive industry. The cluster delivers 10 EFLOPS of computing power and sustains an operational efficiency above 90%. The average end-to-end iteration cycle, from cloud training to in-vehicle deployment, has been cut to five days. Thanks to Xpeng’s in-house data infrastructure, data upload capacity has increased 22-fold, training data bandwidth 15-fold, and model training speed 5-fold.
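For a sense of scale, a back-of-the-envelope division gives the average throughput per GPU. This assumes the 10 EFLOPS figure is the aggregate across exactly 10,000 GPUs; the article does not state the numeric precision or whether the figure is peak or sustained.

```latex
\frac{10\ \text{EFLOPS}}{10^{4}\ \text{GPUs}}
  = \frac{10 \times 10^{18}\ \text{FLOP/s}}{10^{4}\ \text{GPUs}}
  = 10^{15}\ \text{FLOP/s per GPU}
  = 1\ \text{PFLOPS per GPU}
```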

Three Key Milestones Shared

During the event, Xpeng highlighted three key milestones:

- Validation of the scaling law in the autonomous driving domain

- Successful vehicle control by the foundation model running on post-installed (retrofitted) computing hardware

- Launch of 72B-parameter model training under a reinforcement learning framework

Scaling Law Proven in Autonomous Driving

For the first time, Xpeng’s team experimentally validated that the “scaling law” applies to autonomous driving, demonstrating that larger models trained on more data perform better. Drawing on experience from the earlier rule-based era of autonomous driving, the team also designed effective reward functions for reinforcement learning, turning rule-based know-how into productive training strategies.
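The article does not describe Xpeng’s actual reward design. As a hypothetical illustration of how rule-era knowledge can become a reinforcement-learning reward, the sketch below turns a few classic driving rules (no collisions, a two-second following gap, no speeding, no harsh braking) into penalty terms subtracted from a route-progress reward. Every field name, threshold, and weight is an assumption chosen for readability.

```python
from dataclasses import dataclass

@dataclass
class StepObservation:
    """Hypothetical per-step driving state; field names are illustrative only."""
    speed_mps: float        # ego speed in metres per second
    speed_limit_mps: float  # posted speed limit in metres per second
    gap_m: float            # distance to the lead vehicle in metres
    accel_mps2: float       # longitudinal acceleration (negative = braking)
    progress_m: float       # distance travelled along the route this step
    collided: bool

# Hypothetical thresholds and weights standing in for rule-based heuristics.
MIN_TIME_GAP_S = 2.0       # the "two-second rule" for following distance
HARSH_BRAKE_MPS2 = -4.0    # deceleration treated as uncomfortable
COLLISION_PENALTY = 100.0

def rule_based_reward(obs: StepObservation) -> float:
    """Reward = route progress minus penalties derived from hand-written driving rules."""
    reward = obs.progress_m

    # Rule 1: never collide. This penalty dominates everything else.
    if obs.collided:
        return reward - COLLISION_PENALTY

    # Rule 2: keep at least a two-second time gap to the lead vehicle.
    if obs.speed_mps > 0:
        time_gap_s = obs.gap_m / obs.speed_mps
        if time_gap_s < MIN_TIME_GAP_S:
            reward -= (MIN_TIME_GAP_S - time_gap_s) * 5.0

    # Rule 3: penalise speeding in proportion to the excess speed.
    if obs.speed_mps > obs.speed_limit_mps:
        reward -= (obs.speed_mps - obs.speed_limit_mps) * 2.0

    # Rule 4: penalise braking harder than the comfort threshold.
    if obs.accel_mps2 < HARSH_BRAKE_MPS2:
        reward -= (HARSH_BRAKE_MPS2 - obs.accel_mps2) * 1.0

    return reward

if __name__ == "__main__":
    safe = StepObservation(20.0, 25.0, 50.0, 0.0, 2.0, False)
    tailgating = StepObservation(20.0, 25.0, 20.0, 0.0, 2.0, False)
    print("safe:", rule_based_reward(safe))
    print("tailgating:", rule_based_reward(tailgating))
```

The design choice being illustrated is that rules which once *directly* controlled the car instead *score* the learned policy, so the model can still discover behaviors the rules never spelled out.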

Developing the “World Model” for Real-Time Simulation

In addition, Xpeng has begun developing a “World Model” as a key component of the cloud model factory. Given action signals, the system simulates how the real-world environment responds and returns real-time feedback, so the foundation model can keep improving through closed-loop optimization.
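The article gives no implementation details for the World Model. The toy sketch below only illustrates the closed-loop idea it describes: a world model predicts the next state from the current state and an action, a policy drives inside that simulated environment, and the accumulated feedback decides whether a policy update is kept. The 1-D car-following dynamics (hand-coded here, where a real world model would be learned), the two-gain policy, and the random-search update (standing in for a real reinforcement-learning algorithm) are all simplifying assumptions.

```python
import random

LEAD_SPEED_MPS = 15.0  # assumed constant speed of the lead vehicle
TARGET_GAP_M = 30.0    # assumed desired following distance
DT_S = 0.1             # simulation time step in seconds

def world_model(gap_m, speed_mps, accel_mps2):
    """Toy stand-in for a learned world model: given the state and an action
    (acceleration command), predict the next (gap, speed) after DT_S seconds."""
    new_speed = max(0.0, speed_mps + accel_mps2 * DT_S)
    new_gap = gap_m + (LEAD_SPEED_MPS - new_speed) * DT_S
    return new_gap, new_speed

def policy(gap_m, relative_speed_mps, params):
    """Proportional-derivative car-following policy with tunable gains (kp, kd).
    relative_speed_mps = lead speed - ego speed, so positive means the gap is growing."""
    kp, kd = params
    accel = kp * (gap_m - TARGET_GAP_M) + kd * relative_speed_mps
    return max(-5.0, min(2.0, accel))  # clamp to assumed actuator limits

def rollout(params, steps=200):
    """Run the policy inside the world model and return the accumulated feedback."""
    gap, speed = 50.0, 20.0
    total = 0.0
    for _ in range(steps):
        action = policy(gap, LEAD_SPEED_MPS - speed, params)
        gap, speed = world_model(gap, speed, action)
        if gap <= 0.0:
            return total - 1000.0  # simulated collision ends the episode
        total -= 0.01 * abs(gap - TARGET_GAP_M)  # penalty for missing the target gap
    return total

def closed_loop_optimize(iterations=100, seed=0):
    """Closed loop: perturb the policy, evaluate it in the world model, keep improvements."""
    rng = random.Random(seed)
    params = (0.01, 0.0)
    best = rollout(params)
    for _ in range(iterations):
        candidate = (params[0] + rng.gauss(0.0, 0.05), params[1] + rng.gauss(0.0, 0.05))
        score = rollout(candidate)
        if score > best:
            params, best = candidate, score
    return params, best

if __name__ == "__main__":
    start = rollout((0.01, 0.0))
    params, best = closed_loop_optimize()
    print(f"initial score {start:.2f}, optimized score {best:.2f}, gains {params}")
```

Because every candidate is evaluated in simulation before it is accepted, the loop never keeps a policy that scores worse than the one it started from, which is the appeal of optimizing against a world model rather than on real roads.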

Ambition to Lead the Physical AI Era

Chairman He Xiaopeng stated: “Our goal is to become the world’s top physical AI foundation model provider, driving transformative change across cars, robots, and flying vehicles.” Xpeng plans to reveal more technical details about the model’s development and training at the upcoming CVPR conference in June.