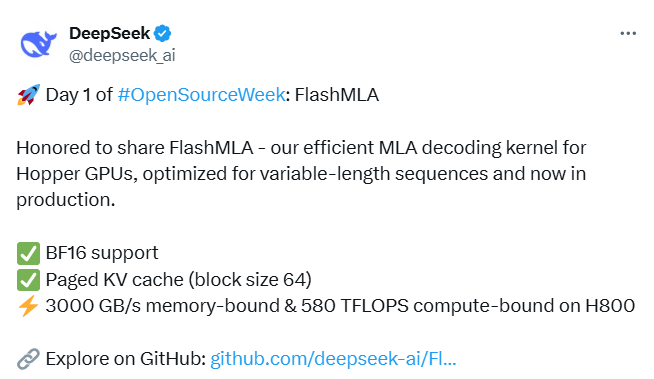

On February 24, DeepSeek kicked off its "Open Source Week" by releasing its first repository, FlashMLA. FlashMLA is an efficient MLA (Multi-head Latent Attention) decoding kernel optimized for Hopper GPUs and designed for serving variable-length sequences. It is already in production use. According to DeepSeek, on the H800 it reaches 3000 GB/s of memory bandwidth in memory-bound configurations and 580 TFLOPS of compute in compute-bound configurations.
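
To see what the two headline figures imply when read together, here is a back-of-the-envelope roofline calculation. It rests on our own assumption, not on DeepSeek's analysis, that 3000 GB/s and 580 TFLOPS can be treated as the ceilings of the memory-bound and compute-bound regimes. Dividing one by the other gives the arithmetic intensity (FLOPs per byte moved) at which a kernel would stop being limited by bandwidth and start being limited by compute. The constant and function names are hypothetical.

```python
# Roofline-style reading of the two figures quoted for FlashMLA on H800.
# Assumption: 3000 GB/s and 580 TFLOPS are treated as the ceilings of the
# memory-bound and compute-bound regimes. They come from different benchmark
# configurations, so this is only a rough model, not a measurement.

MEM_BW_BYTES_PER_S = 3000e9   # 3000 GB/s
COMPUTE_FLOPS = 580e12        # 580 TFLOPS


def ridge_point(compute_flops: float, mem_bw: float) -> float:
    """Arithmetic intensity (FLOP/byte) where the bandwidth and compute ceilings meet."""
    return compute_flops / mem_bw


def attainable_flops(intensity: float,
                     compute_flops: float = COMPUTE_FLOPS,
                     mem_bw: float = MEM_BW_BYTES_PER_S) -> float:
    """Roofline model: throughput is capped by whichever resource runs out first."""
    return min(compute_flops, intensity * mem_bw)


if __name__ == "__main__":
    rp = ridge_point(COMPUTE_FLOPS, MEM_BW_BYTES_PER_S)
    print(f"ridge point ~ {rp:.1f} FLOP/byte")
    for ai in (1.0, 64.0, 256.0):
        tflops = attainable_flops(ai) / 1e12
        print(f"intensity {ai:>6.1f} FLOP/B -> {tflops:.1f} TFLOPS")
```

Under this model the ridge point comes out at roughly 193 FLOP/byte: below it, a kernel's throughput scales with how many bytes it must read, which is why shrinking the amount of cached data matters so much for decoding.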

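MLA (Multi-head Latent Attention), introduced by DeepSeek with DeepSeek-V2, is an attention mechanism designed to make Transformer inference more efficient, especially on long sequences. Like standard multi-head attention, it computes attention in parallel across multiple heads, so the model can attend to different positions and semantic aspects of the text at the same time and capture long-distance dependencies and complex semantic structure. What distinguishes MLA is that keys and values are jointly compressed into a low-rank latent vector: only this latent is stored in the KV cache, and per-head keys and values are reconstructed from it. This greatly reduces the memory the cache occupies and the amount of data each decoding step has to read.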
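
The core idea of caching a compressed latent instead of full per-head keys and values can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not DeepSeek's implementation or the FlashMLA kernel: the sizes, the class `ToyMLA`, and the helper names are hypothetical, the weights are random, and the decoupled rotary-position key and output projection of the real design are omitted.

```python
import math
import random

# Toy single-layer sketch of Multi-head Latent Attention (MLA) during decoding.
# Assumptions for illustration: tiny hypothetical sizes, random weights,
# no RoPE / decoupled rotary key, no output projection, lists instead of tensors.

D_MODEL, N_HEADS, D_HEAD, D_LATENT = 16, 4, 4, 6


def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.3) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs):
    mx = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - mx) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class ToyMLA:
    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_dkv = rand_matrix(D_LATENT, D_MODEL, rng)                          # shared down-projection
        self.w_uk = [rand_matrix(D_HEAD, D_LATENT, rng) for _ in range(N_HEADS)]  # per-head key up-projection
        self.w_uv = [rand_matrix(D_HEAD, D_LATENT, rng) for _ in range(N_HEADS)]  # per-head value up-projection
        self.w_q = [rand_matrix(D_HEAD, D_MODEL, rng) for _ in range(N_HEADS)]    # per-head query projection
        self.latent_cache = []  # only one D_LATENT vector per past token is stored

    def decode_step(self, h):
        """Cache the new token's latent, then attend over all cached tokens."""
        self.latent_cache.append(matvec(self.w_dkv, h))
        outputs = []
        for i in range(N_HEADS):
            q = matvec(self.w_q[i], h)
            # Keys and values are rebuilt from the cached latents, not stored.
            keys = [matvec(self.w_uk[i], c) for c in self.latent_cache]
            values = [matvec(self.w_uv[i], c) for c in self.latent_cache]
            scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(D_HEAD) for k in keys]
            weights = softmax(scores)
            head_out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(D_HEAD)]
            outputs.extend(head_out)  # concatenate heads
        return outputs


def cached_floats_per_token(n_heads, d_head, d_latent):
    """Per-token, per-layer cache size: MHA stores K and V for every head; MLA stores one latent."""
    return {"mha": 2 * n_heads * d_head, "mla": d_latent}


if __name__ == "__main__":
    rng = random.Random(1)
    layer = ToyMLA()
    for _ in range(5):
        out = layer.decode_step([rng.gauss(0.0, 1.0) for _ in range(D_MODEL)])
    print(len(out), len(layer.latent_cache))                   # 16 5
    print(cached_floats_per_token(N_HEADS, D_HEAD, D_LATENT))  # {'mha': 32, 'mla': 6}
```

At toy sizes the saving is modest, but it grows with the number of heads. Plugging in DeepSeek-scale settings (assumed here: 128 heads of dimension 128 and a 512-dimensional latent) gives 32768 versus 512 cached values per token per layer; the real design additionally caches a small decoupled rotary-position key alongside the latent.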