On April 8, StepFun unveiled its latest multimodal reasoning model, Step-R1-V-Mini, which accepts image and text input and produces text output. The model shows strong instruction following and general-purpose reasoning, and it can accurately perceive complex visual content and complete advanced inference tasks. It is now accessible via the StepFun AI web platform, and an API is available to developers through the company's open platform.

Exceptional Visual Perception with Transparent Reasoning

Step-R1-V-Mini delivers powerful visual understanding and reasoning abilities. It can accurately detect fine-grained visual details and follow user prompts to perform deep analysis. For example, when presented with an image of a homemade dish and asked how to prepare it, the model can identify the ingredients and condiments used, such as “300g shrimp, 2 scallion whites,” and provide a step-by-step cooking guide.

One standout feature is the model’s transparent reasoning chain, which lets users trace the logic behind each answer and so makes AI-driven results easier to interpret and trust.

Top Rankings in Visual Reasoning Benchmarks

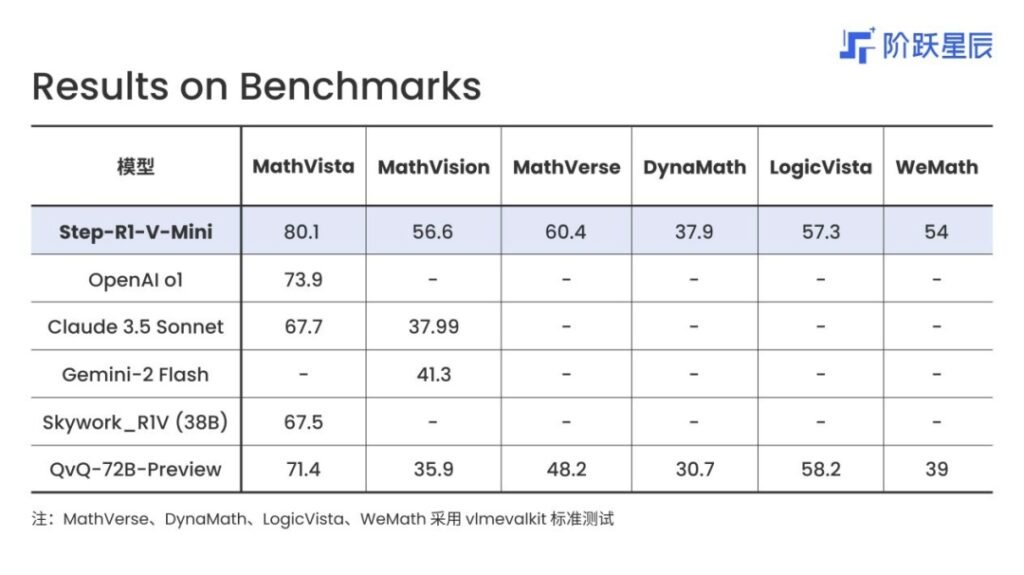

The model has achieved impressive results across several public benchmarks. It ranks first in China on the MathVision leaderboard for visual reasoning, and performs strongly in areas including visual question answering, mathematical logic, and code understanding.

A Strategic Step Toward Intelligent Agents

StepFun has previously released models such as Step-1V, Step-1.5V, and the language reasoning model Step-R-mini. These models have consistently ranked highly on both domestic and international leaderboards such as LMSYS and OpenCompass.

StepFun founder and CEO Jiang Daxin has emphasized that multimodality and reasoning are two essential components for building intelligent agents. The launch of Step-R1-V-Mini reflects the company’s strategic focus in 2025 on advancing AI-powered terminal agents.