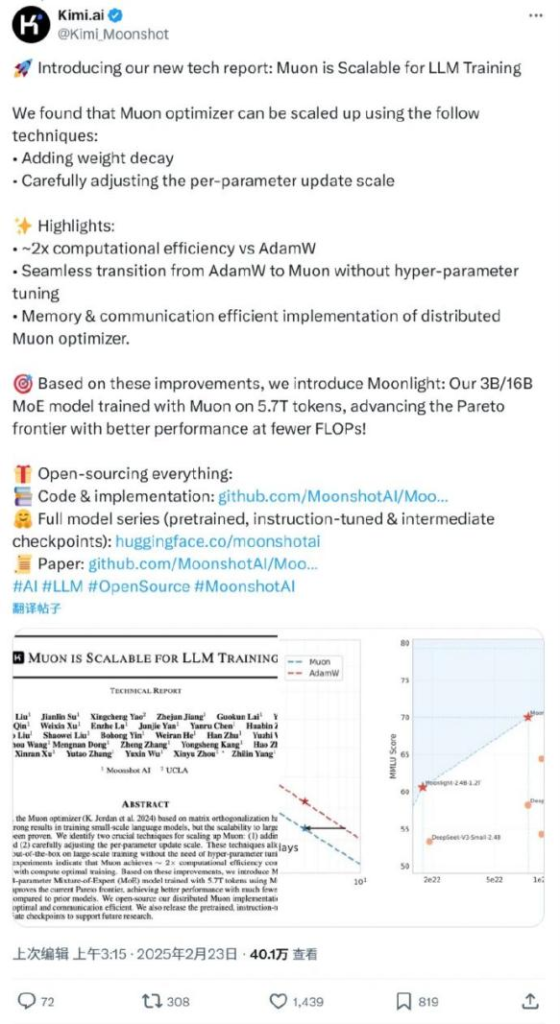

On February 24, Moonshot AI released a new technical report titled “Muon for Scalable LLM Training” and announced “Moonlight,” a mixture-of-experts (MoE) model trained with the Muon optimizer. The model has 16 billion total parameters, of which about 3 billion are activated, and was trained on 5.7 trillion tokens. It achieves better performance with fewer training floating-point operations (FLOPs) than comparable prior models, pushing the Pareto frontier of performance versus training compute further.