In the fierce global competition over large language models, Chinese innovation is shining brightly. After DeepSeek, Alibaba Cloud’s Tongyi Qianwen has emerged as another rising star, demonstrating China’s AI strength to the world.

On the first day of the Chinese New Year, Alibaba Cloud’s Tongyi team made a significant announcement with the launch of its flagship model, Qwen2.5-Max. This model caused a massive stir in the AI field, signaling that Tongyi Qianwen has firmly positioned itself among the top global language models. It has become the second Chinese language model capable of competing with OpenAI’s o1 series.

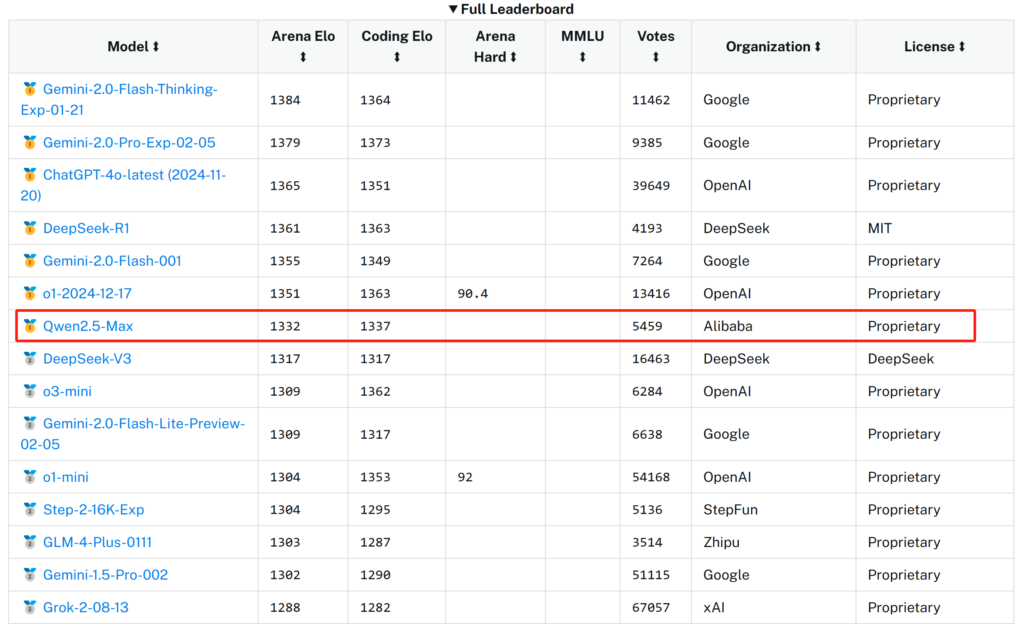

According to the “ChatBot Arena LLM” blind-test leaderboard run by the benchmarking platform LMArena, Qwen2.5-Max earned a score of 1332, ranking 7th overall as of February 2. This puts it ahead of DeepSeek’s DeepSeek-V3 and OpenAI’s o1-mini. Qwen2.5-Max did especially well in the challenging areas of mathematics and programming, taking the top spot in both categories, and it placed 2nd in Hard Prompts.

The ChatBot Arena LLM leaderboard, created by UC Berkeley’s Sky Computing Lab and LMArena, has gained widespread recognition in the industry. Its blind-testing mode, in which users vote on model responses without knowing which model produced them, assesses capabilities such as conversation, code generation, image-text generation, and web development. With over 2.6 million votes, it ranks 197 models based on real-world usage. The top ten includes several OpenAI models such as ChatGPT-4o, along with Google’s Gemini-2.0 and xAI’s Grok-2. Chinese models have nonetheless made a striking impact. DeepSeek-R1 and ChatGPT-4o-latest are tied for 3rd, while Alibaba’s Qwen-max-2025-01-25 (Qwen2.5-Max) ranks 7th. DeepSeek-V3 and GLM-4-Plus-0111 are ranked 8th and 9th, respectively. With half of the top 10 spots held by Chinese models, the leaderboard is a strong testament to the global competitiveness of China’s AI teams.
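
To make the idea of turning blind pairwise votes into a ranking concrete, here is a minimal sketch using a simplified Elo-style update. This is an illustration only: the leaderboard's actual statistical method may differ, and the model names, starting rating of 1000, and step size `k` are all hypothetical values chosen for the example.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(ratings, votes, k=4.0, initial=1000.0):
    """Apply blind pairwise votes in order; each vote is (winner, loser)."""
    for winner, loser in votes:
        r_w = ratings.setdefault(winner, initial)
        r_l = ratings.setdefault(loser, initial)
        # The less expected the win, the larger the rating transfer.
        surprise = 1.0 - expected_score(r_w, r_l)
        ratings[winner] = r_w + k * surprise
        ratings[loser] = r_l - k * surprise
    return ratings


# Hypothetical models and votes, for illustration only.
votes = [("model_a", "model_b"), ("model_a", "model_c"), ("model_b", "model_c")]
ratings = update_ratings({}, votes)
leaderboard = sorted(ratings, key=ratings.get, reverse=True)
print(leaderboard)
```

Because each vote moves the same number of points from loser to winner, the total rating stays constant, and a model climbs only by winning comparisons it was not already expected to win.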

Looking back, Alibaba Cloud’s Tongyi models had already topped global open-source rankings in June and September 2024. Though they had not fully surpassed closed-source models at that time, the release of Qwen2.5-Max on January 29, 2025 marked a powerful comeback. The model employs a large-scale Mixture of Experts (MoE) architecture, akin to a coordinator with numerous experts at its disposal, dynamically allocating resources based on the task at hand. Trained on more than 20 trillion tokens, it has excelled in key benchmarks such as MMLU-Pro (university-level knowledge), LiveCodeBench (programming), LiveBench (comprehensive evaluation), and Arena-Hard (human-preference alignment).

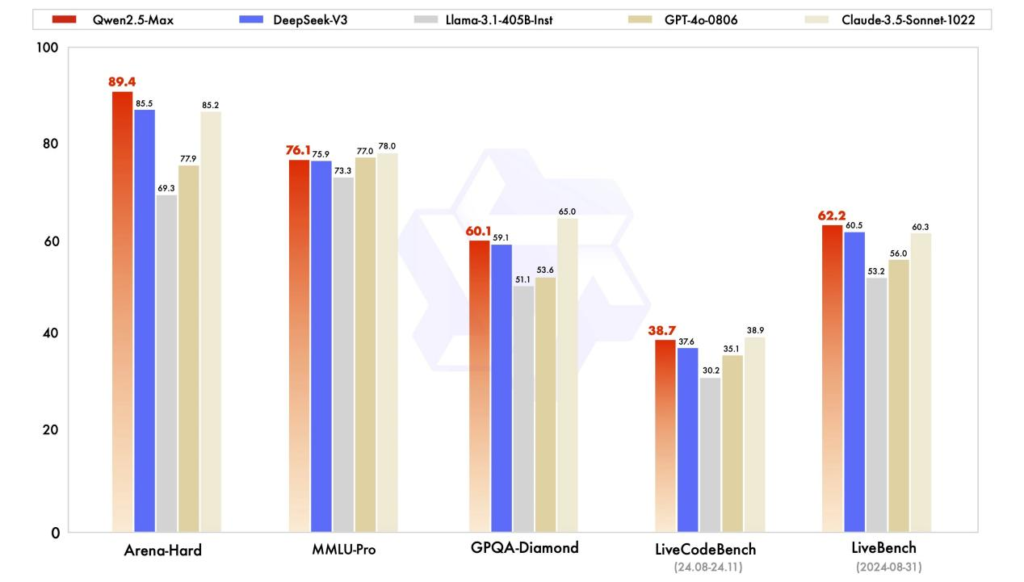

In the challenging Arena-Hard benchmark, which tests complex instructions and multi-round conversations, Qwen2.5-Max scored 89.4, surpassing formidable competitors such as DeepSeek-V3, Llama-3.1-405B-Inst, GPT-4o-0806, and Claude-3.5-Sonnet-1022. Under strict evaluation by foreign expert teams, it quickly grasped the essence of each question and drew on its broad knowledge to give comprehensive, accurate answers.

In base-model comparison tests, Alibaba Cloud’s Tongyi team could not access the base models of GPT-4o and Claude-3.5-Sonnet, which are proprietary. It instead pitted Qwen2.5-Max against DeepSeek V3, a leading open-source MoE model; Llama-3.1-405B, the largest open-source dense model; and its own Qwen2.5-72B. In all 11 benchmark tests, Qwen2.5-Max emerged victorious. ChatBot Arena praised it for its “strong performance in several fields, particularly in specialized technical tasks like programming, mathematics, and hard prompts.”

A New Era of AI

The birth of Qwen2.5-Max is not coincidental. It rests on a sophisticated algorithmic design. Built on a large-scale MoE architecture, the model resembles a knowledge factory, with expert networks specializing in different tasks. For each input, the model routes the work to the most suitable expert modules and then combines their outputs, which lets it draw on a large pool of specialized knowledge while activating only part of the network at a time. All of this sits on a Transformer backbone, which offers strong parallel computation and long-sequence handling and excels at semantic understanding and relating context.
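
The route-then-combine idea can be sketched in a few lines of plain Python. This is a toy illustration of top-k expert routing in general, not Qwen2.5-Max's actual implementation: the class name `ToyMoELayer`, the random weights, the eight experts, and the choice of `top_k=2` are all assumptions made for the example.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


class ToyMoELayer:
    """A router scores every expert, but only the top_k experts actually run."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One routing vector per expert (hypothetical random weights).
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each "expert" is a single dim x dim linear map to keep the sketch small.
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
                        for _ in range(num_experts)]

    def route(self, token):
        """Return [(expert_index, weight), ...] for the top_k experts."""
        scores = [dot(w, token) for w in self.router]
        order = sorted(range(len(scores)), key=scores.__getitem__, reverse=True)
        chosen = order[: self.top_k]
        # Renormalise the gate weights over the chosen experts only.
        weights = softmax([scores[i] for i in chosen])
        return list(zip(chosen, weights))

    def forward(self, token):
        """Weighted sum of the chosen experts' outputs."""
        out = [0.0] * len(token)
        for idx, weight in self.route(token):
            expert_out = [dot(row, token) for row in self.experts[idx]]
            out = [o + weight * e for o, e in zip(out, expert_out)]
        return out


layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
token = [0.5, -1.0, 0.25, 2.0]
routing = layer.route(token)
output = layer.forward(token)
print(routing)
print(output)
```

In this sketch only two of the eight experts do any work for a given token, which is the property that lets an MoE model grow its total parameter count without a proportional increase in per-token computation.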

Pre-trained on over 20 trillion tokens and then refined with SFT (Supervised Fine-Tuning) and RLHF (Reinforcement Learning from Human Feedback), the model not only learns rich language patterns but also adapts to specific tasks, making a qualitative leap in aligning with human preferences and generating content that meets user expectations.

The Future of Qwen2.5-Max: Boundless Potential

The journey for Qwen2.5-Max is just beginning, and its application potential is vast. In intelligent office solutions, it could become an invaluable assistant for professionals, whether for rapidly generating business documents, proofreading and optimizing content, or summarizing key meeting points and offering decision suggestions. In education, it can act as a smart tutor, tailoring teaching plans to students’ learning rhythms, answering questions, and helping teachers create course materials and design tests. In the medical field, it could assist doctors in analyzing medical images, providing diagnostic suggestions, and offering health advice to patients, helping alleviate medical resource shortages. In intelligent customer service, it can be integrated into multi-channel platforms, responding to user inquiries instantly, routing tickets accurately, and helping customer service staff resolve issues efficiently.

The rapid development of Alibaba Cloud’s Tongyi Qianwen is a microcosm of the rise of China’s AI industry. Through technology and innovation, it now stands shoulder to shoulder with global leaders. This marks a new era of AI, one set to inject new momentum into the transformation of industries across the globe.